-

Starship Factory

Photo shows four* Starship Boosters at various stages of assembly inside one of two Mega Bays at the SpaceX Build Site. The two Mega Bays – one for ships, one for boosters – are adjacent to the Starship Factory in Boca Chica, Texas.

A “Super Heavy” booster has very little internal structure. It’s mostly just a liquid methane tank stacked atop a liquid oxygen tank. At the bottom of the oxygen tank are the 33 engines, and at the top of the methane tank are the “grid fins” and the motors that operate them. A pipe runs down from the bottom center of the upper tank through the middle of the lower tank to carry methane to the engines. All of the other piping and electronics (and the self-destruct explosives) are attached to the outside.

* The items on the far left and far right will be parts of the same booster.

-

New Mathematical Reasoning AI from Google

A new AI system from Google adds a skill that ChatGPT lacks: logical reasoning. It recently outscored most human entrants on International Mathematical Olympiad problems, demonstrating that it can construct lengthy logical arguments that hold up to scrutiny.

Large Language Models like ChatGPT can generate gushers of plausible explanations but can’t tell whether they’re right or wrong. Adding the new reasoning capabilities to the existing generative ones could be the next big step forward.

As usual, Sabine Hossenfelder explains it better than I could:

-

AI Not Understanding

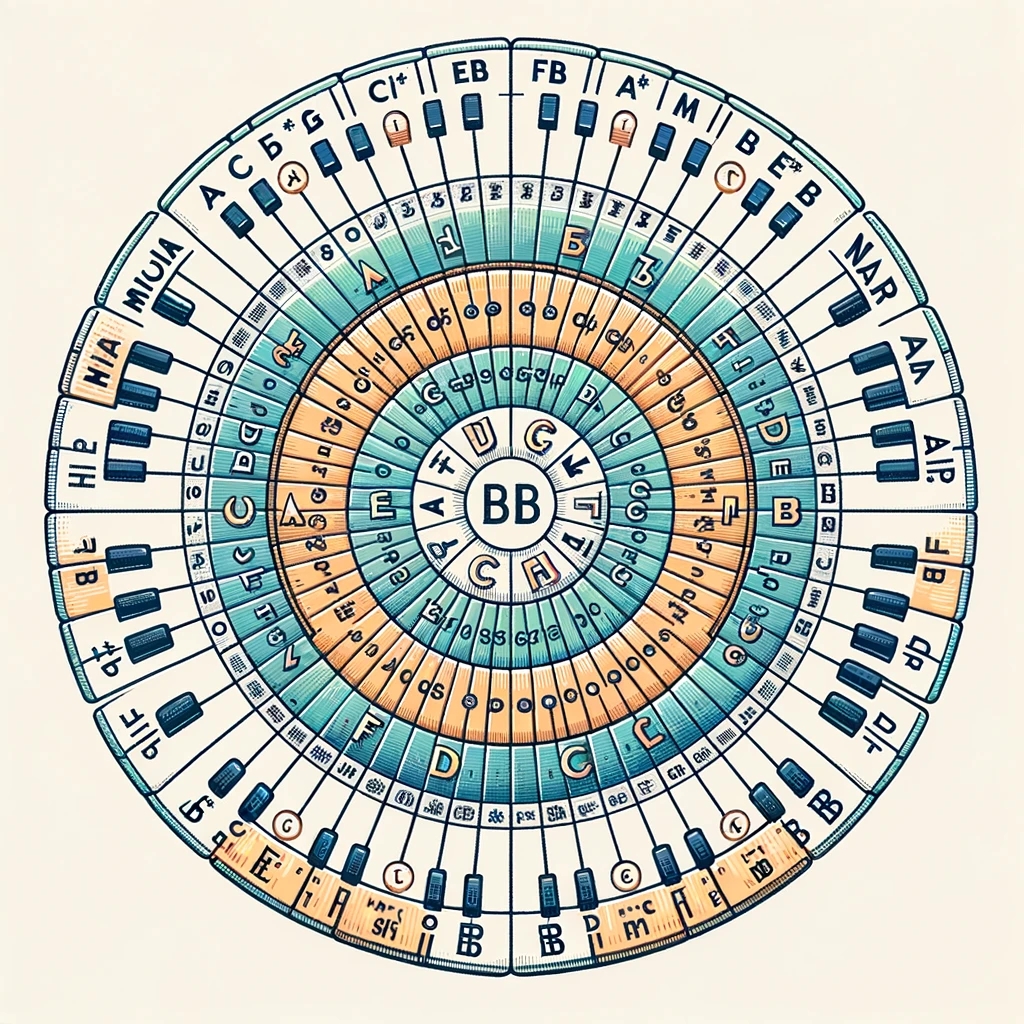

I asked ChatGPT about the Circle of Fifths, and it gave me a good general description. Then I asked it to illustrate (using DALL-E, also from OpenAI) and it produced this colorful monstrosity. This captures some superficial graphic similarities to other representations of the Circle of Fifths but completely misses on every level of detail … and it doesn’t know that anything is wrong.

To me this illustrates the vast difference between how people think and what current generative models are doing. We tend to think an AI is almost human-like until it does something like this.

-

ChatGPT Discussion at Semantic Arts

A presentation and discussion at Semantic Arts led by Steven Chalem.

-

“It’s useless, someone who agrees with you.”

Physicist Carlo Rovelli is asked about his disagreements with friend and frequent collaborator Lee Smolin. He quotes Smolin as saying, “If both of us had the same ideas, one of us would be superfluous,” then comments, “It’s useless, someone who agrees with you.”

(A brief note about the substance of their disagreement: As Rovelli describes things, he and Smolin agree that happenings (physical events) are more basic than things. But Smolin believes that the past and future are fundamentally different while Rovelli does not.)

-

ChatGPT-4: The Chat, the G, the P, the T, and the 4

What is ChatGPT-4? I’ll break it down.

“Chat” is for Conversation

The “Chat” part indicates that ChatGPT-4 is a conversational interface to an AI model (namely, the model GPT-4). An AI model is a type of software that processes examples in some area (in this case, words on the internet), finds patterns, and uses those patterns to make predictions or decisions. In addition to the huge corpora of text that ChatGPT uses as examples, it was also trained on how conversations work – through “Reinforcement Learning from Human Feedback”.

Please give very brief responses. This is for a blog post.

Sure, I’d be happy to provide brief responses. Please proceed with your questions.

Upper Volta!

Upper Volta was the former name of Burkina Faso, a landlocked country in West Africa. The name changed in 1984.

Capital?

The capital of Burkina Faso is Ouagadougou.

G is for Generative

GPT-4 is a “generative” model; it generates text. Some AI models recognize faces, some drive cars, some generate images or music. GPT-4 generates text.

Please write one short marketing slogan for turnips.

Turn Up with Turnips: Unbeatable Flavor, Unbelievable Health!

P is for Pre-Trained

Generating text in response to a prompt is the easy part. What makes it (relatively) easy is having on-hand a store of the patterns that were found in the training data.

That’s the pre-training. It takes a long time and massive computing resources to distill everything on the internet (and more) into a form that can be referenced for quick responses to prompts. For each version of ChatGPT, it’s only done once.

That’s why some of ChatGPT’s knowledge is stuck in September 2021.
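To make the split between pre-training and generating concrete, here is a toy sketch in Python. It is nothing like how GPT-4 actually works internally; it only records which word follows which in a tiny, made-up corpus (the slow step, done once) and then reuses that record to extend a prompt (the fast step). The corpus and the names `pretrain` and `generate` are my own illustrative assumptions.

```python
import random
from collections import defaultdict

# Hypothetical miniature "training corpus"; real models train on vastly more text.
CORPUS = "the cat sat on the mat . the dog sat on the rug ."


def pretrain(text):
    """Slow step, done once: record which word follows which."""
    follows = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return dict(follows)


def generate(patterns, prompt, length=5, seed=0):
    """Fast step, done per prompt: look up stored patterns to extend the prompt."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(length):
        options = patterns.get(words[-1])
        if not options:  # nothing was learned about this word
            break
        words.append(rng.choice(options))
    return " ".join(words)


PATTERNS = pretrain(CORPUS)
print(generate(PATTERNS, "the dog"))
print(generate(PATTERNS, "the king"))  # "king" never appeared in the corpus
```

The second prompt comes back unchanged: the toy model has nothing stored about “king,” because the word was not in the text it was trained on. A knowledge cutoff is the same limitation at a much larger scale.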

Who is King Charles III of the United Kingdom?

As of my knowledge cutoff in September 2021, Charles III has not ascended to the throne of the United Kingdom. The current monarch is Queen Elizabeth II and her heir apparent is Charles, Prince of Wales. If he ascends to the throne, he could choose the name Charles III or another regnal name.

T is for Transformer

I’ll let ChatGPT handle this one.

In AI, what is a Transformer? Please provide a very brief explanation at a college freshman reading level. Focus on parallel processing.

[Long explanation]

that was great, but not brief enough

Sure, let me make it simpler:

A Transformer is a type of AI model used to understand language. It’s special because it can process all words in a sentence simultaneously (parallel processing) and figure out which words are most important (using “attention”). This makes it faster and smarter at understanding context.

4 is for the moment

ChatGPT-4 is built on the fifth publicly released version of the GPT model (there was a 3.5). The biggest surprises came with GPT-3, when natural-sounding conversations began to emerge. Getting there from GPT-2 was the result of many incremental improvements plus a much larger model. (The size of the model determines how detailed the patterns found will be.)

ChatGPT-3.5 brought more natural conversation and many other improvements. It is still more widely used than ChatGPT-4.

There’s already a lot of talk about ChatGPT-5, which OpenAI is currently working on. Researchers may be reaching the limits of how much electrical power and cooling it’s feasible to consume for this purpose. I’m guessing it will take another conceptual innovation on the scale of Transformers to build something as surprising as ChatGPT-4.

What will be the next big innovation in large language models?

Predicting future innovations is always speculative, but a few potential directions include:

More efficient training and inference: Reducing the computational resources required to train and use these models.

Better understanding and reasoning: Improvements in a model’s ability to comprehend complex contexts, generate more accurate responses, and handle logical reasoning tasks.

Multimodal capabilities: Integrating text with other types of data like images or audio, enabling the model to understand and generate content across different formats.

Ethics and fairness: Advances to address bias in AI, make its decisions more transparent, and align its behavior with human values.

Remember, these are just possibilities based on the state of AI as of 2021, and the actual future could look quite different!

The text inside the dialog boxes is copied verbatim from chat sessions. Some dialog boxes show the beginnings of sessions and some show continuations of sessions. None of the results are “cherry-picked.” That is, the responses shown are the first responses ChatGPT made to the given prompts.

I wrote the parts outside the boxes, with research assistance and ideas from ChatGPT-4.

-

Marx, Nietzsche, and Kierkegaard on Dippin’ Dots – One Year Later

One year ago I asked ChatGPT 3 a question. Today I asked ChatGPT 4 the same question (with an added guideline).

What would Karl Marx, Friedrich Nietzsche, and Soren Kierkegaard say about Dippin’ Dots? Answer in 100 words or less using vivid language.

ChatGPT 4.0

Marx might view Dippin’ Dots as an embodiment of capitalism’s unending quest for novelty, representing commodified pleasure while obscuring labor exploitation. Nietzsche could see them as a symbol of the Will to Power, a triumph of human creativity and a defiance of traditional ice cream norms. Kierkegaard, reflecting on life’s paradoxes, could liken Dippin’ Dots to existential angst: sweet and fleeting, causing us to question our concepts of ice cream and of reality itself.

-

Coffee Shop Ontology by ChatGPT

Model: GPT-4

Current generative AIs like ChatGPT 4.0 can’t do my job yet, but they can handle the easy parts impressively.

design an ontology for a small independent coffee shop

Sure, I can help you create a basic ontology for a small independent coffee shop. An ontology typically consists of classes, attributes, and relationships.

- Classes:

  - CoffeeShop: This would represent the coffee shop itself.

  - Employee: This class would represent people who work at the coffee shop.

  - Customer: This class would represent the customers who visit the coffee shop.

  - Product: This class would represent the various products sold at the coffee shop.

  - Supplier: This would represent the various suppliers of raw materials.

  - RawMaterial: This would represent the various raw materials required to make the products.

  - Order: This would represent the customer orders.

- Attributes:

  - CoffeeShop: Name, Location, Opening hours, Employee list, Product list, Supplier list

  - Employee: Name, Employee ID, Job Role, Working hours

  - Customer: Name, Customer ID, Order history, Favourite product

  - Product: Name, Product ID, Price, Ingredient list, Supplier

  - Supplier: Name, Supplier ID, Supply product, Contact details

  - RawMaterial: Name, Raw Material ID, Supplier, Cost

  - Order: Order ID, Customer, List of products, Total cost, Time of order

- Relationships:

  - CoffeeShop – Employee: “Has” relationship (A CoffeeShop has Employees)

  - CoffeeShop – Product: “Sells” relationship (A CoffeeShop sells Products)

  - CoffeeShop – Supplier: “Contracts with” relationship (A CoffeeShop contracts with Suppliers)

  - Employee – CoffeeShop: “Works at” relationship (An Employee works at CoffeeShop)

  - Customer – Order: “Places” relationship (A Customer places an Order)

  - Order – Product: “Contains” relationship (An Order contains Products)

  - Product – RawMaterial: “Requires” relationship (A Product requires RawMaterials)

  - RawMaterial – Supplier: “Is supplied by” relationship (A RawMaterial is supplied by a Supplier)

  - Supplier – CoffeeShop: “Supplies to” relationship (A Supplier supplies to CoffeeShop)

  - Product – Supplier: “Is supplied by” relationship (A Product is supplied by a Supplier)

This is a very basic ontology for a coffee shop, but you could expand it further depending on your requirements. You could add additional classes like “Equipment”, “Sales”, or “Loyalty Program”, for example, and you could add additional attributes to your existing classes. For example, for “Customer” you might want to track their preferred payment method, and for “Employee” you could track their training or certification levels.
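For readers who think in code, here is a minimal sketch of a few of those classes and relationships, using plain Python tuples as a stand-in for triples. The class names come from the response above; the property names (`places`, `contains`, and so on), the sample individuals, and the `violations` helper are illustrative assumptions of mine. The check is a simple closed-world lookup, not real OWL semantics, where domain and range drive inference rather than validation.

```python
# Classes and relationships taken from the lists above; the property
# names and sample data are illustrative assumptions.
CLASSES = {"CoffeeShop", "Employee", "Customer", "Product",
           "Supplier", "RawMaterial", "Order"}

# (domain class, property, range class)
RELATIONSHIPS = [
    ("CoffeeShop", "has", "Employee"),
    ("CoffeeShop", "sells", "Product"),
    ("Customer", "places", "Order"),
    ("Order", "contains", "Product"),
    ("Product", "requires", "RawMaterial"),
    ("RawMaterial", "isSuppliedBy", "Supplier"),
]

# Hypothetical individuals: name -> class
INDIVIDUALS = {
    "alice": "Customer",
    "order1": "Order",
    "latte": "Product",
    "milk": "RawMaterial",
}

# Hypothetical facts as (subject, property, object) triples
FACTS = [
    ("alice", "places", "order1"),
    ("order1", "contains", "latte"),
    ("latte", "requires", "milk"),
]


def violations(facts):
    """Return facts whose subject/object classes match no declared relationship."""
    allowed = set(RELATIONSHIPS)
    return [
        (s, p, o) for s, p, o in facts
        if (INDIVIDUALS.get(s), p, INDIVIDUALS.get(o)) not in allowed
    ]


print(violations(FACTS))                          # []
print(violations([("milk", "places", "latte")]))  # this fact is flagged
```

Even at this size the sketch shows why the relationships matter more than the attribute lists: they are what lets software notice that a raw material cannot place an order.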

-

SpaceX Starship Launch Aftermath

-

ChatGPT-4 on Unifying Semantic and Dimensional Models

This is part of a longer conversation.

What if I already have an OWL ontology and a populated triple store with billions of triples? How can I present denormalized dimensional data to SQL Query writers and report developers?

ChatGPT

If you have a populated triple store with billions of triples and an OWL ontology, that’s a great start! However, bridging the gap between RDF triples (the native data model for triple stores) and denormalized dimensional data that SQL users are accustomed to can be a challenge.

Here are the steps you might follow:

- Define Your SPARQL Views: Write SPARQL queries that extract data from your RDF triple store in a way that aligns with your denormalized dimensional model. This could mean creating SPARQL CONSTRUCT queries to generate new triples, or SELECT queries that result in a table-like format. For instance, each row might represent a fact, with columns for each dimension and measure. These queries should be designed according to your OWL ontology and the needs of your report developers.

- Create Virtual SQL Views: Once you have your SPARQL views, use a technology that can create a SQL view on top of the SPARQL query results. This makes the results of your SPARQL queries look like traditional SQL tables or views. Technologies that can do this include various RDF-to-SQL mapping tools, SQL query federation tools, and certain triple store technologies. For example, OpenLink Virtuoso is a database that supports both SPARQL and SQL queries, and can create SQL views on top of SPARQL results.

- Documentation and Training: Once you have your SQL views in place, it’s important to provide good documentation and training for your SQL users. This should include an explanation of what each view represents, how the data in the view maps to your ontology, and any limitations or caveats that the users should be aware of. Users should also be trained in how to write SQL queries against these views, and how to interpret the results.

- Provide Support: Finally, ensure you have a process in place to provide ongoing support for your users. This could include answering questions, fixing bugs, and making enhancements to your views or ontology as needed.

Remember that presenting RDF data in a SQL-like way can involve some compromises. Not all aspects of your RDF data or OWL ontology may be accurately represented in a SQL-like view. In such cases, it might be beneficial to train some of your more advanced users in SPARQL and the concepts of RDF and OWL, so they can directly query and analyze your triple store when necessary.
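As a rough illustration of the first two steps in that answer, the sketch below pivots triples into one denormalized row per order and exposes the result as an ordinary SQL view. It makes big simplifying assumptions: a tiny in-memory SQLite table stands in for the triple store, a SQL `CASE` pivot stands in for the SPARQL SELECT, and the predicates (`customer`, `product`, `total`) and the view name `order_facts` are hypothetical. It is not how Virtuoso or any particular mapping tool does it.

```python
import sqlite3

# A three-column table stands in for the triple store (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE triples (s TEXT, p TEXT, o TEXT)")
conn.executemany(
    "INSERT INTO triples VALUES (?, ?, ?)",
    [
        ("order1", "customer", "alice"),
        ("order1", "product", "latte"),
        ("order1", "total", "4.50"),
        ("order2", "customer", "bob"),
        ("order2", "product", "mocha"),
        ("order2", "total", "5.25"),
    ],
)

# One row per order: each predicate becomes a column (a denormalized fact row).
conn.execute("""
    CREATE VIEW order_facts AS
    SELECT s AS order_id,
           MAX(CASE WHEN p = 'customer' THEN o END) AS customer,
           MAX(CASE WHEN p = 'product'  THEN o END) AS product,
           CAST(MAX(CASE WHEN p = 'total' THEN o END) AS REAL) AS total
    FROM triples
    GROUP BY s
""")

# Report developers query the view like any other table.
rows = conn.execute(
    "SELECT customer, product, total FROM order_facts ORDER BY order_id"
).fetchall()
print(rows)
```

The pivot also shows one of the compromises mentioned above: if an order had two `product` triples, `MAX` would silently keep only one of them, so multi-valued properties need their own view or a different design.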
